From Theory to Reality: What it Really Means to Deploy Generative AI in Production

"It's just integrating an AI API," they said... and thus began a learning journey that every engineer should know about. The uncomfortable truth about Generative AI in production. 💡

After spending time working with teams trying to deploy Generative AI solutions to production, I've learned that reality differs significantly from what tutorials and blog posts suggest. Let me share some uncomfortable truths and lessons learned:

🎯 The Truth About Hallucinations

It's not just that models "hallucinate" - they do so in surprisingly creative ways. In a customer service project, the model started inventing return policies that never existed, with such detail and confidence that even some sales agents believed them to be true.

Key Learning: Implement ground truth systems where each response must specifically cite verified internal documents. It's not perfect, but it can reduce hallucinations by around 90%.

💡 What Actually Works:

Maintain a database of problematic responses and their corrections

Create prompts that require specific references to the company's knowledge base

Establish human verification systems for critical cases

⚠️ The True Cost of Non-Determinism

"The model sometimes responds differently" sounds innocent until you're facing an angry customer who received two contradictory answers in less than an hour. This isn't just a technical problem - it's a business trust issue.

In any business, variations in responses related to terms and conditions of a service can cost you the trust of a key client. The solution often isn't technical, but procedural. Many times, you don't need the model to answer by itself, but rather to understand what the user wants and know where to find it.

Develop a set of pre-approved responses

Implement a response versioning system

Create clear protocols for escalating ambiguous cases that require timely human intervention

🔍 The Reality of Evaluation

Let's forget complex metrics for a moment. What really matters is: Is it solving the user's problem? You might have excellent accuracy metrics, but the right question is: Are users actually satisfied?

What Really Matters:

Time to effective problem resolution

Rate of human intervention needed

Real user satisfaction (not just automated metrics)

💰 The Real Economics of AI in Production

Let me be direct: costs can spiral in unexpected ways.

Depending on your system's complexity, a logic error could cause infinite loops or multiple retry attempts trying to achieve a particular goal. For instance, a system might get stuck trying to process a failed request, consuming resources with each attempt without a clear exit condition.

In systems using retrieval-augmented generation (RAG) to enrich model responses, teams often overlook the additional token costs from documents concatenated to the prompt. A simple query that should cost 1000 tokens can end up consuming far more by including entire documents instead of relevant fragments.

Be very careful with users who spend more time than necessary interacting with your system. While this might be fine for consumer applications, at the enterprise level, AI should solve specific business-related problems, not provide entertainment or serve as a general-purpose agent.

🛠️ Effective Control Strategies:

Implement strict retry limits (maximum 3-5 attempts)

Set appropriate timeouts for each operation

Monitor and alert on abnormal retry patterns

Implement circuit breakers to prevent cascade failures

Limit the number of documents retrieved per query

Implement relevancy filters before sending content to the model

Use context compression techniques

Set clear conversation turn limits (e.g., maximum 5 interactions per query)

Implement session timeouts (e.g., 5 minutes of inactivity)

Define clear objectives for each type of interaction

Create goal-oriented conversational flows

🔒 Security: Beyond Policy Compliance

Security isn't just about GDPR or HIPAA compliance. It's understanding that every prompt is a potential vulnerability. A clever user can achieve prompt injection and completely alter your system's behavior.

Strategies That Actually Work:

Aggressive input sanitization (Guardrails)

Prompt injection pattern monitoring. Similar to antivirus software, maintain a database of known patterns and check for similarities between these patterns and user messages

Real-time anomaly detection systems. While analyzing user input is important, we must also review outputs. Monitoring model-generated responses can help detect if our system has been compromised by identifying changes in the model's usual behavior

⚡ The Human Factor

The most challenging part isn't the technology - it's the people. Teams that need to adapt, users expecting magic, stakeholders wanting immediate results.

Critical Learnings:

Involve end users from day one

Set realistic expectations with all stakeholders

Create continuous training programs

💭 Final Reflection: Beyond the Models

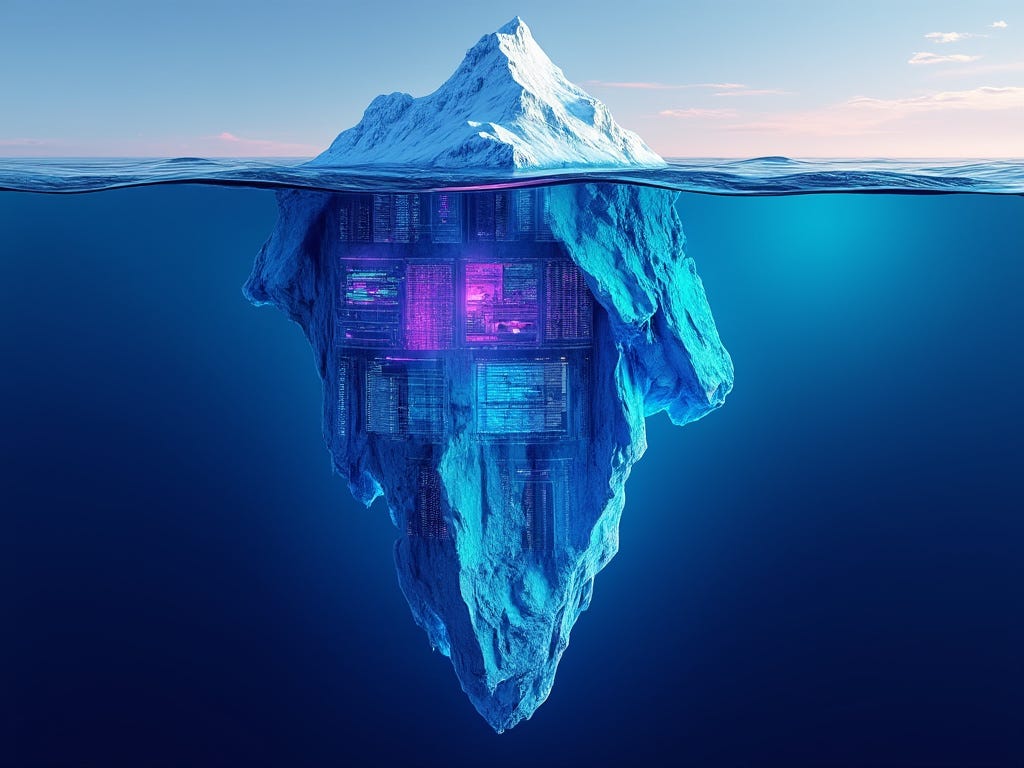

Implementing these AI systems in production has led us to a fundamental truth: an LLM is indeed a powerful tool, but it requires an entire orchestration of systems and processes around it to generate real value.

The challenges we face aren't so different from those software engineering has faced for decades: scalability, maintainability, security, monitoring, and resource optimization. The difference lies in how these challenges now intertwine with the probabilistic and sometimes unpredictable nature of language models.

The real magic isn't in the model itself, but in the engineering that surrounds it. It's the monitoring systems that detect anomalous behaviors, the control mechanisms that prevent exorbitant costs, the data pipelines that keep knowledge up to date, and the evaluation systems that ensure response quality. It's pure, hard engineering work.

The success of an AI system in production depends less on the chosen model and more on the solidity of its architecture, the robustness of its control systems, and the meticulousness of its implementation. Software engineers are the true architects of these systems, building the foundations upon which models can operate safely, efficiently, and effectively.

Implementing AI systems in production isn't just a technical challenge - it's a humbling exercise. Each week we learn something new, each error teaches us, and each success reminds us how much we still have to learn. But above all, it reminds us that true innovation isn't about using the latest model version, but about building robust systems that transform that potential into real and sustainable value.